Birdie’s Integration Paths

Understanding Birdie’s Integration Paths

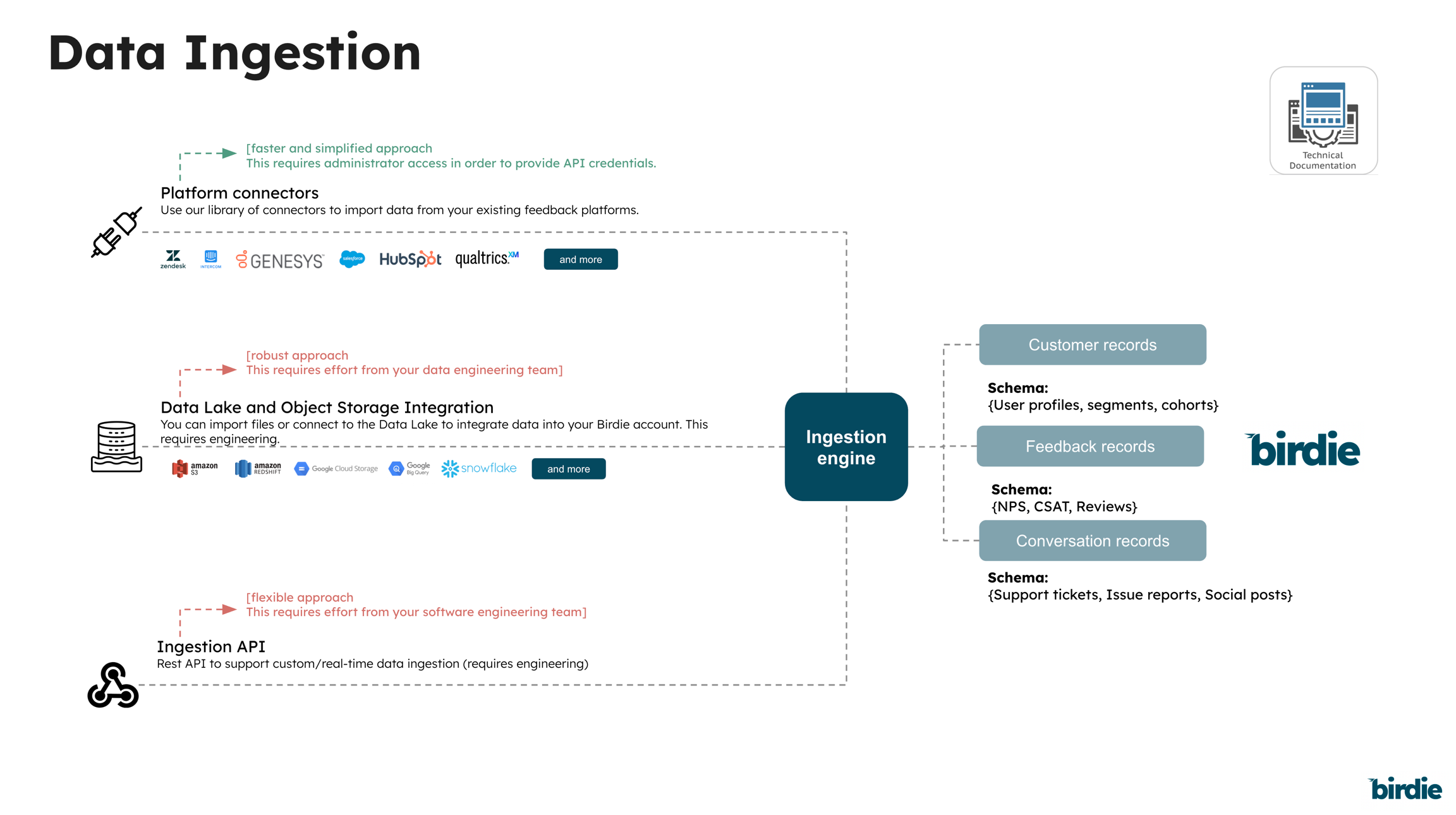

Birdie offers several ingestion methods, each designed for different levels of data control, compliance, and flexibility. Selecting the right option depends on your organization’s data maturity, security policies, and technical capabilities.

| Ingestion method | Best fit | Typical use | | | |
| --- | --- | --- | --- | --- | --- |
| Native Connectors | Standard API-based platforms | Feedback systems like Zendesk, Intercom, Salesforce, Qualtrics | Low | Medium | Low |
| Data Lake / File-based Ingestion | Centralized enterprise data management | Data joins, anonymization, compliance requirements | High | Medium | High |
| REST Ingestion API | Developer-led organizations | Software-driven ingestion pipelines | Medium–High | Medium | Very High |
| Custom Solutions (Professional Services) | Unique or multi-source architectures | Orchestration across multiple systems, unsupported APIs | Variable | High | Maximum |

When to Choose Each Option

Native Connectors

Choose this when:

Your data resides in well-known, API-accessible SaaS platforms (e.g., Salesforce, Zendesk, Intercom).

You want the fastest and simplest path to integration.

Your security team is comfortable with granting API credentials.

You only need feedback data ingestion, without external data joins or transformations.

Avoid this when:

You need to join or normalize values from multiple platforms.

Your organization enforces strong data residency or anonymization rules.

You require ingestion of versioned or historical customer data.

Example Scenario: A CX team wants to ingest recent survey results from Qualtrics directly into Birdie for sentiment analysis. Minimal transformation is needed, so a Native Connector is ideal.

Data Lake / File-based Ingestion

Choose this when:

You already centralize your data in systems like BigQuery or Snowflake, or you can easily export data into S3/GCS/Azure.

You need to join multiple data sources before ingestion (e.g., linking feedback to profiles or support tickets).

You must comply with strict PII masking, anonymization, or governance policies.

You require full control over data preparation, schemas, and validation.

Avoid this when:

You lack internal data engineering capabilities or infrastructure to manage pipelines.

Your team cannot maintain recurring data exports.

Example Scenario: An enterprise client merges feedback data from Salesforce and Zendesk into Snowflake, where all PII is hashed. Birdie ingests the final harmonized dataset from Snowflake.
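As an illustration of the PII-hashing step in the scenario above, here is a minimal Python sketch of pseudonymizing records before they are exported for ingestion. The field names (`email`, `customer_name`), the secret key, and the use of a keyed hash (HMAC-SHA-256) rather than a plain hash are assumptions made for this example, not Birdie requirements; your governance policy determines the actual method.

```python
import hashlib
import hmac

# Hypothetical secret; in practice, load it from a secrets manager.
SECRET_KEY = b"replace-with-a-real-secret"

# Hypothetical column names treated as PII in this sketch.
PII_FIELDS = ("email", "customer_name")


def pseudonymize(value: str, key: bytes = SECRET_KEY) -> str:
    """Return a keyed SHA-256 digest (hex) of a trimmed, lowercased value."""
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(key, normalized, hashlib.sha256).hexdigest()


def mask_record(record: dict) -> dict:
    """Copy a record, replacing non-empty PII fields with their digests."""
    masked = dict(record)
    for field in PII_FIELDS:
        if masked.get(field):
            masked[field] = pseudonymize(masked[field])
    return masked


row = {"ticket_id": "T-1", "email": "Ana@Example.com ", "text": "Great support"}
masked_row = mask_record(row)
```

Because the value is normalized before hashing, the same customer produces the same digest across source systems, so hashed columns can still be used as join keys.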

REST Ingestion API

Choose this when:

Your engineering team prefers to build and maintain direct API integrations.

You need event-driven ingestion or fine-grained control over data payloads.

You want to automate custom validations or enrichments before sending data to Birdie.

You need a fully automated, developer-managed ingestion flow.

Avoid this when:

You have limited development resources or short project timelines.

Your data exists mostly in third-party SaaS tools already covered by Birdie’s connectors.

Example Scenario: A software team builds middleware that listens to internal CRM events and pushes structured JSON payloads to Birdie’s REST API, enabling near real-time ingestion.
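The middleware in this scenario could look roughly like the following Python sketch, which maps an internal CRM event to a JSON POST request. The endpoint URL, the bearer-token header, and the payload field names are placeholders invented for illustration; the real values must come from Birdie’s API documentation.

```python
import json
import urllib.request

# Hypothetical values: the real endpoint, auth scheme, and payload schema
# are defined by Birdie's API documentation, not by this sketch.
INGEST_URL = "https://api.example.com/ingest/feedback"
API_TOKEN = "YOUR_API_TOKEN"


def build_ingest_request(event: dict) -> urllib.request.Request:
    """Turn an internal CRM event into a JSON POST request (not yet sent)."""
    payload = {
        "id": event["id"],
        "text": event["comment"],
        "created_at": event["timestamp"],
    }
    return urllib.request.Request(
        INGEST_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_TOKEN}",
        },
        method="POST",
    )


crm_event = {
    "id": "evt-42",
    "comment": "Checkout was slow",
    "timestamp": "2024-01-01T12:00:00Z",
}
request = build_ingest_request(crm_event)
# To actually send it: urllib.request.urlopen(request, timeout=10)
```

Building the request separately from sending it keeps the mapping logic easy to unit-test and lets the caller add retries or batching around the network call.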

Custom Solutions (Professional Services)

Choose this when:

Your ingestion needs don’t fit within any of the standard connector or ingestion models.

You need multi-source orchestration (e.g., combining Zendesk + Salesforce + internal CSV).

You require custom data joins, enrichments, or transformations not supported natively.

The integration involves unsupported authentication or connection methods (e.g., VPN, Kafka, SOAP APIs).

Examples of Custom Needs:

Combining Genesys and Zendesk tickets using ticket_id joins.

Joining Salesforce Account data with Intercom conversations by account_id.

Parsing proprietary file formats or connecting through internal VPNs.
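To make the join examples above concrete, the following Python sketch attaches account attributes to conversations by `account_id`. The record shapes and the `name` and `segment` fields are hypothetical; real Salesforce and Intercom exports will have different columns.

```python
def join_conversations_to_accounts(accounts: list, conversations: list) -> list:
    """Left-join conversations to accounts on account_id.

    Conversations without a matching account are kept, with the
    account attributes set to None.
    """
    accounts_by_id = {account["account_id"]: account for account in accounts}
    joined = []
    for conversation in conversations:
        account = accounts_by_id.get(conversation["account_id"], {})
        joined.append(
            {
                **conversation,
                "account_name": account.get("name"),
                "segment": account.get("segment"),
            }
        )
    return joined


# Hypothetical sample data standing in for the two exports.
accounts = [{"account_id": "A1", "name": "Acme", "segment": "Enterprise"}]
conversations = [
    {"conversation_id": "C1", "account_id": "A1", "text": "Billing question"},
    {"conversation_id": "C2", "account_id": "A9", "text": "Login issue"},
]
joined_rows = join_conversations_to_accounts(accounts, conversations)
```

A left join is used here so that feedback is never dropped just because the account lookup failed; whether unmatched rows should be kept is a scoping decision for each project.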

How to Identify When a Custom Solution Is Needed

You can identify custom requirements early by looking for non-standard ingestion behaviors such as:

| Indicator | What it implies | Suggested approach |
| --- | --- | --- |
| Multiple source systems must be merged before ingestion | Requires custom orchestration logic | Engage Professional Services |
| Data must be enriched or joined using custom fields | Field-level mapping exceeds connector defaults | Consider custom transformations |
| Internal policies prohibit direct API integrations | Security or compliance constraints | Use Data Lake ingestion or custom pipeline |
| Proprietary or uncommon data source | Unsupported platform or authentication type | Custom connector or file automation |

How to Size and Scope a Custom Solution

Properly sizing your ingestion project helps estimate delivery time, effort, and cost accurately. Birdie uses three size tiers (S, M, L) across all services. Below are guidelines to help you classify your custom requirement.

Small (S)

Scope: Single data source or one new connector.

Complexity: Minimal transformation (renaming, basic filtering).

Effort: 20–40 hours.

Example: Ingesting a new CSV export into Birdie with standardized columns.

Medium (M)

Scope: Two connected data sources or moderate transformation.

Complexity: Requires joins or conditional field normalization.

Effort: 40–80 hours.

Example: Matching Zendesk and Salesforce data using shared account_id.

Large (L)

Scope: Three or more source platforms or complex transformation logic.

Complexity: Involves multiple authentication layers or advanced orchestration (Kafka, VPN, custom endpoints).

Effort: 80+ hours.

Example: Integrating data from Genesys, Zendesk, and internal databases through custom scripts and mappings.
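The tier guidelines above can be approximated by a small rule-based helper. This is only a rough sketch of one way to read the guidelines: the parameter names and the precedence of the rules are assumptions, and the actual sizing is determined by Birdie’s team during scoping.

```python
# Effort ranges in hours, taken from the tiers above (None = open-ended).
EFFORT_HOURS = {"S": (20, 40), "M": (40, 80), "L": (80, None)}


def classify_size(
    source_count: int,
    needs_joins: bool = False,
    advanced_orchestration: bool = False,
) -> str:
    """Return 'S', 'M', or 'L' for a custom ingestion requirement.

    Assumed precedence: any Large signal wins, then any Medium signal,
    otherwise the requirement is Small.
    """
    if source_count < 1:
        raise ValueError("source_count must be at least 1")
    if source_count >= 3 or advanced_orchestration:
        return "L"
    if source_count == 2 or needs_joins:
        return "M"
    return "S"


csv_export_tier = classify_size(1)
zendesk_salesforce_tier = classify_size(2, needs_joins=True)
multi_platform_tier = classify_size(3, needs_joins=True, advanced_orchestration=True)
```

A helper like this is mainly useful for a first-pass estimate before the Discovery & Scoping stage.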

Collaboration and Project Workflow

When engaging with Birdie’s Professional Services for ingestion projects, the collaboration process typically follows these stages:

Discovery & Scoping

Define data sources, field mappings, and compliance constraints.

Identify ingestion method(s) and potential custom requirements.

Effort Estimation & Proposal

Birdie’s team estimates the complexity (S/M/L).

A detailed proposal and pricing estimate are shared.

Implementation & Validation

Connector or custom flow is built, configured, and validated with sample data.

Adjustments are made for transformation rules and data normalization.

Go-Live & Support

The first ingestion run is monitored jointly.

Birdie provides handover documentation and operational guidelines.

Decision Flow Summary

The decision flow below summarizes how to choose an ingestion method; it can also be presented as a diagram:

Start → Identify Source Platform

Is it a commercial platform with an open API? → Native Connector

Do you need to join or anonymize data? → Data Lake / File-based

Do you prefer developer control with API access? → REST Ingestion API

None of the above or multi-source orchestration required? → Custom Professional Service
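The same flow can be expressed as a short function. This sketch is one possible reading of the flow, not an official algorithm: the parameter names are invented for illustration, and the join/anonymization check is evaluated before the Native Connector check because the guidance earlier in this page advises against Native Connectors when joins or anonymization are required.

```python
def choose_ingestion_path(
    commercial_platform_with_open_api: bool,
    needs_join_or_anonymization: bool,
    prefers_developer_control: bool,
    multi_source_orchestration: bool = False,
) -> str:
    """Map answers to the decision-flow questions to an ingestion path."""
    if multi_source_orchestration:
        return "Custom Professional Service"
    # Assumption: join/anonymization needs override the connector option.
    if needs_join_or_anonymization:
        return "Data Lake / File-based"
    if commercial_platform_with_open_api:
        return "Native Connector"
    if prefers_developer_control:
        return "REST Ingestion API"
    # None of the above applies.
    return "Custom Professional Service"


qualtrics_survey_path = choose_ingestion_path(
    commercial_platform_with_open_api=True,
    needs_join_or_anonymization=False,
    prefers_developer_control=False,
)
```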
